Shared by Datawhale

Must-read papers: LLM/AI. Editor: 深度学习自然语言处理 (Deep Learning and Natural Language Processing)

Project repository: https://github.com/InterviewReady/ai-engineering-resources

Byte-pair Encoding https://arxiv.org/pdf/1508.07909

Byte Latent Transformer: Patches Scale Better Than Tokens https://arxiv.org/pdf/2412.09871

IMAGEBIND: One Embedding Space To Bind Them All https://arxiv.org/pdf/2305.05665

FAISS library https://arxiv.org/pdf/2401.08281

Facebook Large Concept Models https://arxiv.org/pdf/2412.08821v2

TensorFlow https://arxiv.org/pdf/1605.08695

DeepSeek file system https://github.com/deepseek-ai/3FS/blob/main/docs/design_notes.md

Milvus DB https://www.cs.purdue.edu/homes/csjgwang/pubs/SIGMOD21_Milvus.pdf

Billion Scale Similarity Search: FAISS https://arxiv.org/pdf/1702.08734

Ray https://arxiv.org/abs/1712.05889

Attention is All You Need https://papers.neurips.cc/paper/7181-attention-is-all-you-need.pdf

Flash Attention https://arxiv.org/pdf/2205.14135

Multi Query Attention https://arxiv.org/pdf/1911.02150

Grouped Query Attention https://arxiv.org/pdf/2305.13245

Google Titans outperform Transformers https://arxiv.org/pdf/2501.00663

VideoRoPE: Rotary Position Embedding https://arxiv.org/pdf/2502.05173

Sparsely-Gated Mixture-of-Experts Layer https://arxiv.org/pdf/1701.06538

GShard https://arxiv.org/abs/2006.16668

Switch Transformers https://arxiv.org/abs/2101.03961

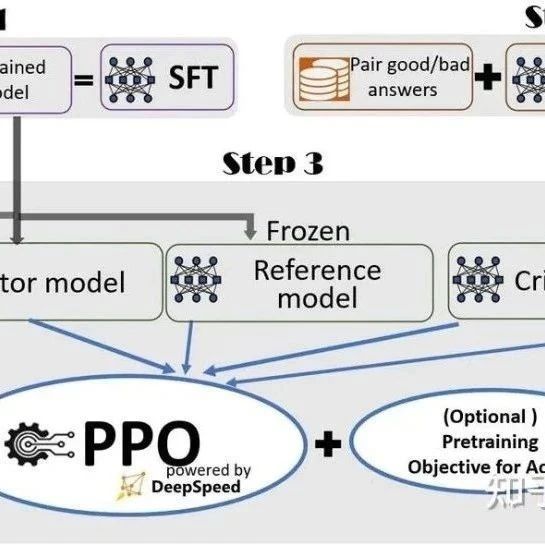

Deep Reinforcement Learning with Human Feedback https://arxiv.org/pdf/1706.03741

Fine-Tuning Language Models with RLHF https://arxiv.org/pdf/1909.08593

Training language models with RLHF https://arxiv.org/pdf/2203.02155

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models https://arxiv.org/pdf/2201.11903

Chain of thought https://arxiv.org/pdf/2411.14405v1/

Demystifying Long Chain-of-Thought Reasoning in LLMs https://arxiv.org/pdf/2502.03373

Transformer Reasoning Capabilities https://arxiv.org/pdf/2405.18512

Scaling model test time is better than scaling parameters https://arxiv.org/pdf/2408.03314

Training Large Language Models to Reason in a Continuous Latent Space https://arxiv.org/pdf/2412.06769

DeepSeek R1 https://arxiv.org/pdf/2501.12948v1

Latent Reasoning: A Recurrent Depth Approach https://arxiv.org/pdf/2502.05171

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits https://arxiv.org/pdf/2402.17764

ByteDance 1.58 https://arxiv.org/pdf/2412.18653v1

Transformer Square https://arxiv.org/pdf/2501.06252

1B outperforms 405B https://arxiv.org/pdf/2502.06703

Speculative Decoding https://arxiv.org/pdf/2211.17192

Distilling the Knowledge in a Neural Network https://arxiv.org/pdf/1503.02531

BYOL - Distilled Architecture https://arxiv.org/pdf/2006.07733

DINO https://arxiv.org/pdf/2104.14294

RWKV: Reinventing RNNs for the Transformer Era https://arxiv.org/pdf/2305.13048

Mamba https://arxiv.org/pdf/2312.00752

Distilling Transformers to SSMs https://arxiv.org/pdf/2408.10189

LoLCATs: On Low-Rank Linearizing of Large Language Models https://arxiv.org/pdf/2410.10254

Think Slow, Fast https://arxiv.org/pdf/2502.20339

Google Math Olympiad 2 https://arxiv.org/pdf/2502.03544

Competitive Programming with Large Reasoning Models https://arxiv.org/pdf/2502.06807

Google Math Olympiad 1 https://www.nature.com/articles/s41586-023-06747-5

Can AI be made to think critically https://arxiv.org/pdf/2501.04682

Evolving Deeper LLM Thinking https://arxiv.org/pdf/2501.09891

LLMs Can Easily Learn to Reason from Demonstrations Structure https://arxiv.org/pdf/2502.07374

Separating communication from intelligence https://arxiv.org/pdf/2301.06627

Language is not intelligence https://gwern.net/doc/psychology/linguistics/2024-fedorenko.pdf

Image is 16x16 word https://arxiv.org/pdf/2010.11929

CLIP https://arxiv.org/pdf/2103.00020

DeepSeek image generation https://arxiv.org/pdf/2501.17811

ViViT: A Video Vision Transformer https://arxiv.org/pdf/2103.15691

Joint Embedding abstractions with self-supervised video masks https://arxiv.org/pdf/2404.08471

Facebook VideoJAM AI gen https://arxiv.org/pdf/2502.02492

Automated Unit Test Improvement using Large Language Models at Meta https://arxiv.org/pdf/2402.09171

OpenAI o1 System Card https://arxiv.org/pdf/2412.16720

LLM-powered bug catchers https://arxiv.org/pdf/2501.12862

Chain-of-Retrieval Augmented Generation https://arxiv.org/pdf/2501.14342

Swarm by OpenAI https://github.com/openai/swarm

Model Context Protocol https://www.anthropic.com/news/model-context-protocol

Uber QueryGPT https://www.uber.com/en-IN/blog/query-gpt/